When I heard that the "WWDC Scholarships" program existed, I was thrilled and decided to take part. I quickly knew that I wanted to do something with machine learning. I looked at Apple's latest frameworks and came across Create ML, which lets you train a model on a Mac that can then be used with Core ML and Vision on macOS and iOS.

I started the project during the Christmas holidays in December and experimented with Create ML and Core ML to see what they could do. At first I trained a model to recognize animals, then I moved on to handwriting recognition and played around with the MNIST dataset of handwritten digits. But since you weren't supposed to use public resources, I couldn't use MNIST. Instead, I decided to recognize numbers and operators to build a handwritten calculator. For this I needed as many images as possible of each character (0-9, +, -, *, /, =).
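Create ML's image classifier can read its training images from one folder per label (`MLImageClassifier.DataSource.labeledDirectories(at:)`), so the 15 characters have to become 15 folder names. The post doesn't say how the folders were named; the sketch below assumes hypothetical spelled-out labels such as `plus` and `divide` (since "/" cannot appear in a folder name) and maps a predicted label back to the character it stands for. `CalculatorSymbol` is an illustrative name, not part of any framework.

```swift
// Hypothetical class labels for the 15 characters. With a labeled-directories
// layout, each label is a folder name, for example:
//   Training/zero/*.png, Training/plus/*.png, Training/divide/*.png
// Spelled-out names are used because "/" cannot appear in a folder name
// and "*" is awkward in shells.
enum CalculatorSymbol: String, CaseIterable {
    case zero, one, two, three, four, five, six, seven, eight, nine
    case plus, minus, times, divide, equals

    /// The character this class contributes to the calculator's expression.
    var character: Character {
        switch self {
        case .plus: return "+"
        case .minus: return "-"
        case .times: return "*"
        case .divide: return "/"
        case .equals: return "="
        default:
            // The digit cases are declared first and in order, so a digit's
            // position in allCases equals its value.
            let value = CalculatorSymbol.allCases.firstIndex(of: self)!
            return Character(String(value))
        }
    }
}
```

For example, `CalculatorSymbol(rawValue: "times")?.character` yields `"*"`.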

My first idea was to let the user draw each character on the Mac, train the model right there and use it directly. Unfortunately, writing with a mouse is awkward and imprecise, so I dropped this idea.

The other option is to train the model on a Mac, then copy it to an iPad and recognize the handwriting there. I chose this option because drawing on a touch screen is much easier, and because training can take a while, so it is better to do it in advance.

The first version I developed consisted of a drawing surface, a "Recognize" button and a label for the result. You could draw a number or an operator, and the app recognized it and wrote it into the label.

Then I added a "Calculate" button. Pressing it evaluated the expression in the label and displayed the result.

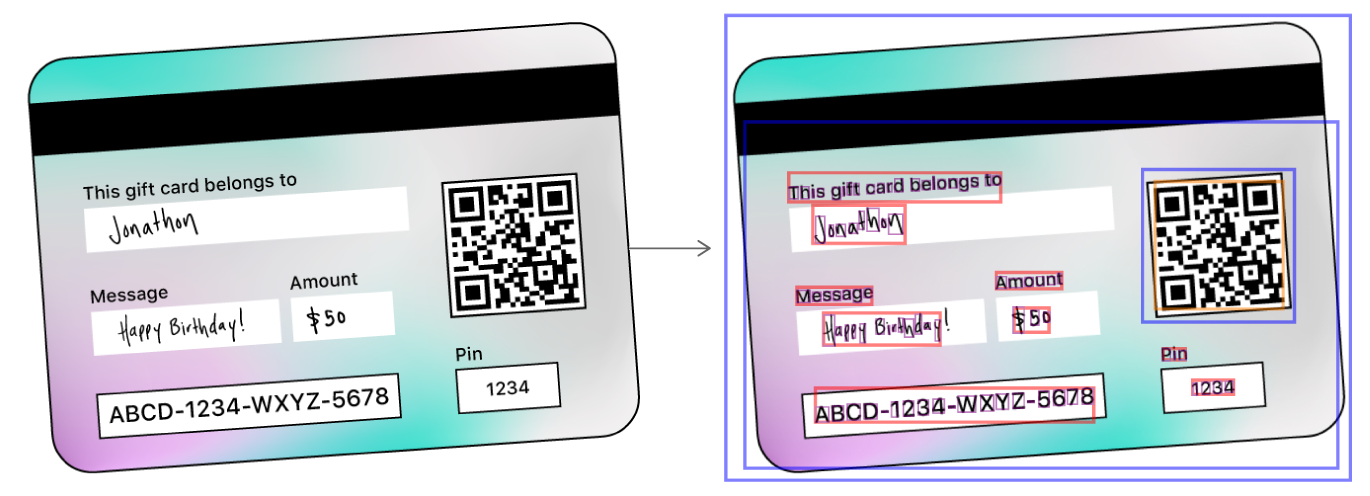
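The post doesn't say how the "Calculate" button evaluated the label's text. On Apple platforms `NSExpression(format:)` is a common shortcut, but it raises an Objective-C exception on malformed input, which a misrecognized character can easily produce. As an alternative, here is a minimal hand-written evaluator, assuming the label holds only non-negative integers and the operators `+ - * /`, optionally followed by `=`. `evaluate` and `EvaluationError` are hypothetical names.

```swift
/// Errors reported by the hypothetical evaluator below.
enum EvaluationError: Error, Equatable {
    case invalidCharacter(Character)
    case malformedExpression
    case divisionByZero
}

/// Evaluates an expression such as "12+3*4=" with the usual precedence
/// (* and / before + and -). Everything from the first "=" on is ignored.
/// Assumption: only non-negative integers and the operators + - * / occur.
func evaluate(_ expression: String) throws -> Double {
    let digits = Set("0123456789")
    let operatorSymbols = Set("+-*/")

    // Tokenize into alternating numbers and operators.
    var numbers: [Double] = []
    var operators: [Character] = []
    var pending = ""
    for ch in expression {
        if ch == "=" { break }
        if digits.contains(ch) {
            pending.append(ch)
        } else if operatorSymbols.contains(ch) {
            // Every operator must directly follow a number.
            guard let value = Double(pending) else { throw EvaluationError.malformedExpression }
            numbers.append(value)
            operators.append(ch)
            pending = ""
        } else {
            throw EvaluationError.invalidCharacter(ch)
        }
    }
    guard let lastValue = Double(pending) else { throw EvaluationError.malformedExpression }
    numbers.append(lastValue)

    // Pass 1: fold * and / into the current term, left to right.
    var terms: [Double] = [numbers[0]]
    var additiveOperators: [Character] = []
    for (op, value) in zip(operators, numbers.dropFirst()) {
        switch op {
        case "*":
            terms[terms.count - 1] *= value
        case "/":
            guard value != 0 else { throw EvaluationError.divisionByZero }
            terms[terms.count - 1] /= value
        default: // "+" or "-" starts a new term
            additiveOperators.append(op)
            terms.append(value)
        }
    }

    // Pass 2: combine the terms with + and -, left to right.
    var result = terms[0]
    for (op, value) in zip(additiveOperators, terms.dropFirst()) {
        result = (op == "+") ? result + value : result - value
    }
    return result
}
```

With this sketch, `try evaluate("12+3*4=")` returns `24.0`, while a misrecognized input such as `"2+*3"` throws `EvaluationError.malformedExpression` instead of crashing the app.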

The first version worked, but the program was neither accurate nor fast, and it wasn't very user-friendly either. Therefore I wanted to make some changes. I found out that Vision can do much more than classify images: it can detect where text is in an image and where the individual characters are (see image below).
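In Vision, a `VNDetectTextRectanglesRequest` with `reportCharacterBoxes` set to `true` returns `VNTextObservation`s whose `characterBoxes` hold one bounding box per character. These boxes are normalized to 0...1 and measured from the bottom-left corner of the image. One way to use them, sketched here under assumptions, is to crop each character and classify it on its own, in left-to-right order. To stay runnable without Vision, the sketch uses hypothetical `NormalizedBox` and `PixelRect` structs in place of `CGRect`, and assumes a single line of text in an image whose pixel origin is at the top-left.

```swift
/// Hypothetical stand-in for a Vision bounding box:
/// normalized to 0...1, origin at the bottom-left of the image.
struct NormalizedBox {
    var x: Double, y: Double, width: Double, height: Double
}

/// Hypothetical crop rectangle in pixels, origin at the top-left of the image.
struct PixelRect: Equatable {
    var x: Int, y: Int, width: Int, height: Int
}

/// Converts character boxes into top-left-origin pixel rects, ordered left to
/// right so the classified characters can be concatenated into the expression.
func cropRects(for boxes: [NormalizedBox], imageWidth: Int, imageHeight: Int) -> [PixelRect] {
    let w = Double(imageWidth)
    let h = Double(imageHeight)
    return boxes
        .sorted { $0.x < $1.x } // reading order for a single line of text
        .map { box in
            let height = box.height * h
            // Flip y: Vision measures from the bottom edge, the crop from the top edge.
            let top = h - box.y * h - height
            return PixelRect(x: Int((box.x * w).rounded()),
                             y: Int(top.rounded()),
                             width: Int((box.width * w).rounded()),
                             height: Int(height.rounded()))
        }
}
```

Sorting by the left edge is enough for one line of handwriting; multi-line input would first need grouping by vertical position.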